MemoryMaker

In Beta

RAG application using vector embeddings for intelligent knowledge retrieval and search.

Date

2024-04

Duration

6 weeks

Team

solo

Difficulty

hard

Project Story

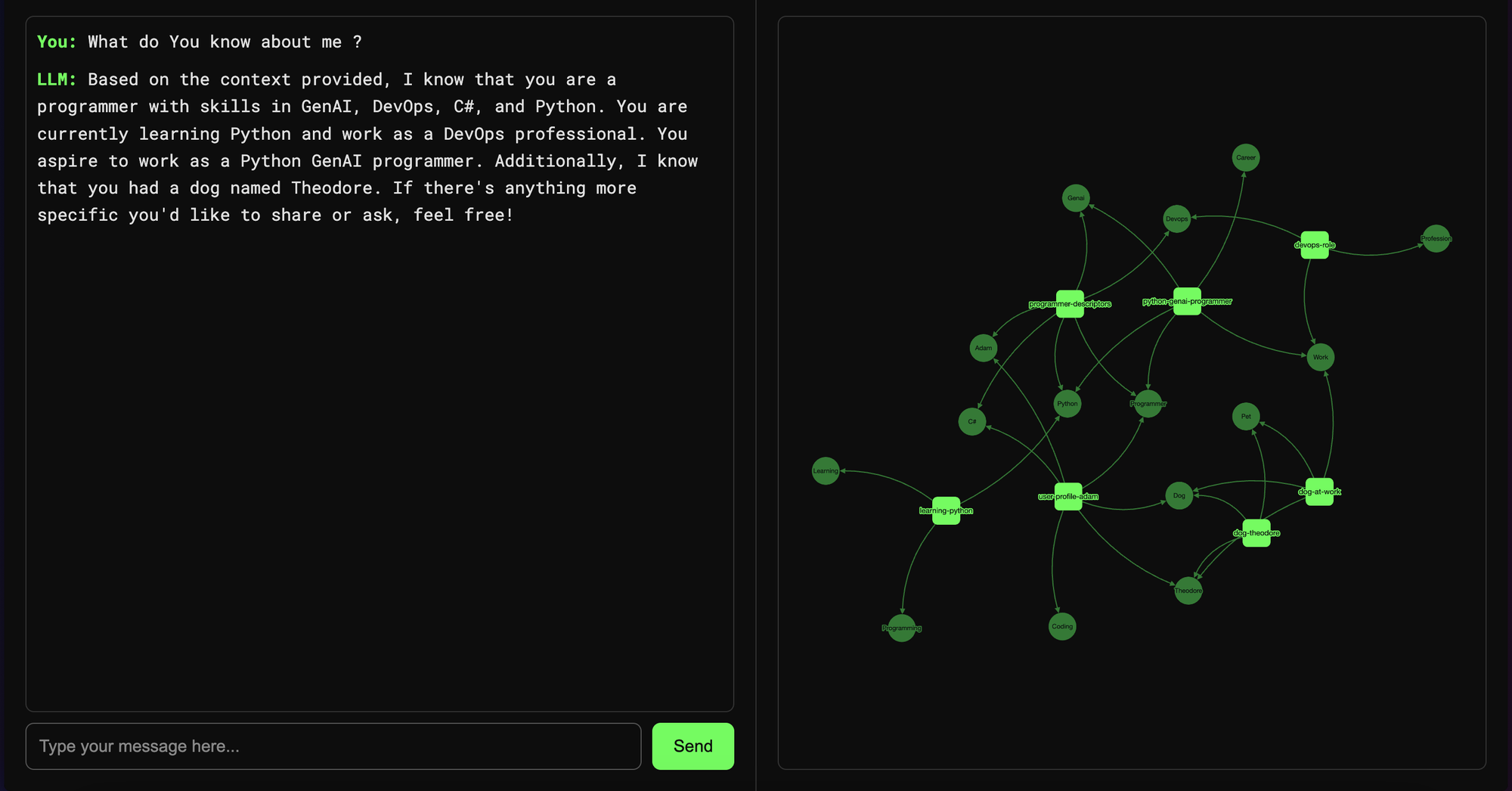

MemoryMaker explores the capabilities of Retrieval-Augmented Generation (RAG) systems by creating a knowledge base from documents that can be intelligently searched and queried using natural language.

Interface showing document upload and query capabilities

The system converts document text into vectors with OpenAI embeddings and indexes them with FAISS for efficient similarity search. Users can then ask questions about their documents and receive answers grounded in the most relevant passages.
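The following is a minimal sketch of the retrieval half of this pipeline. It makes two substitutions so that it runs offline. A hypothetical `embed` function builds a hashed bag-of-words vector in place of the OpenAI embeddings call, so it only captures word overlap, not meaning. A small `FlatIndex` class performs the same exact inner-product search that FAISS's `IndexFlatIP` does. Vectors are L2-normalized so the inner product equals cosine similarity.

```python
import math
import re
import zlib

DIM = 256  # size of the toy embedding space (assumption for this sketch)


def embed(text: str) -> list[float]:
    """Hypothetical stand-in for an OpenAI embedding call.

    Hashes each lowercase word into one of DIM buckets (a lexical bag-of-words,
    not a semantic embedding), then L2-normalizes so that the inner product
    of two vectors equals their cosine similarity.
    """
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class FlatIndex:
    """Exact (brute-force) inner-product search, like FAISS's IndexFlatIP."""

    def __init__(self) -> None:
        self.vectors: list[list[float]] = []
        self.texts: list[str] = []

    def add(self, texts: list[str]) -> None:
        # Embed and store each chunk alongside its original text.
        for text in texts:
            self.vectors.append(embed(text))
            self.texts.append(text)

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        # Score every stored vector against the query and keep the top k.
        q = embed(query)
        scored = [
            (sum(a * b for a, b in zip(q, vec)), i)
            for i, vec in enumerate(self.vectors)
        ]
        scored.sort(reverse=True)
        return [(self.texts[i], score) for score, i in scored[:k]]


docs = [
    "FAISS performs fast similarity search over dense vectors.",
    "The quarterly budget meeting is scheduled for Monday.",
    "Embeddings map text to vectors that capture meaning.",
]
index = FlatIndex()
index.add(docs)
for text, score in index.search("How does similarity search over vectors work?", k=2):
    print(f"{score:.2f}  {text}")
```

In a real deployment, `embed` would call the embeddings API and the linear scan would be replaced by `faiss.IndexFlatIP`, or by an approximate FAISS index for large collections. The add-then-search flow stays the same.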

Technical Details

Tech Stack

Python, OpenAI Embeddings, FAISS, Vector Database, RAG

Key Features

✓ Document indexing

✓ Semantic search

✓ Natural language queries

✓ Context-aware responses

✓ Batch processing

✓ Memory management
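The page does not describe how MemoryMaker turns retrieved passages into answers. A common way to get context-aware responses is to place the top-ranked chunks in the prompt ahead of the user's question. The sketch below shows that augmentation step with a hypothetical `build_prompt` helper. The `max_chars` budget and the instruction wording are assumptions, and the call to the language model itself is left out.

```python
def build_prompt(question: str, chunks: list[str], max_chars: int = 2000) -> str:
    """Assemble a grounded prompt from retrieved chunks (hypothetical helper).

    `chunks` are assumed to be ordered best-first, as returned by the
    similarity search. Chunks are numbered so an answer can cite them, and
    packing stops once the next chunk would push the context past
    `max_chars`, a rough character-based stand-in for a token budget.
    """
    parts: list[str] = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        block = f"[{i}] {chunk}"
        if used + len(block) > max_chars:
            break
        parts.append(block)
        used += len(block)
    context = "\n".join(parts)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


prompt = build_prompt(
    "What does FAISS do?",
    ["FAISS performs fast similarity search over dense vectors."],
)
print(prompt)
```

The resulting string would then be sent to a chat model. The "say so" instruction is one common way to discourage answers that the retrieved context does not support.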

Challenges Faced

⚠ Vector database management

⚠ Retrieval accuracy vs. response quality

⚠ Computing resource requirements

⚠ Document preprocessing complexity

Key Learnings

💡 Vector embeddings enable search by meaning rather than exact keywords

💡 RAG systems bridge the gap between LLMs and private data

💡 FAISS provides excellent performance for vector search

💡 Document chunking affects retrieval quality

💡 Context relevance is key for useful answers
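The chunking lesson can be illustrated with a fixed-size chunker with overlap. This is a common baseline and not necessarily what MemoryMaker uses. The sketch counts whitespace-separated words as a stand-in for model tokens, and `chunk_words`, `chunk_size`, and `overlap` are hypothetical names and defaults. Because neighbouring chunks overlap, text near a chunk boundary also appears in the adjacent chunk. This reduces the chance that a relevant passage is split in a way that no single chunk matches.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of `chunk_size` words that share `overlap` words.

    Words (whitespace-separated) stand in for model tokens in this sketch.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
print(chunk_words(sample, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Smaller chunks generally make matches more precise but give the model less surrounding context. Larger chunks do the reverse, which is one face of the retrieval accuracy vs. response quality trade-off listed under Challenges Faced.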